From October 26–30, 2020, Bianca Herlo, Fieke Jansen, and Marieke van Dijk held the compact seminar “Adversarial Design – Dressing for the Surveillance Age” at the Berlin University of the Arts.

The class focused on technologies that monitor and surveil us and that are implemented without much public debate. They come at great expense to our human rights and disproportionately affect racialized, gendered, and poor communities. To counter these negative impacts and shape a more positive agenda, the practice of resistance has been a crucial force for embedding values such as openness, the commons, privacy, equality, autonomy, and solidarity in technology and policy.

The seminar included presentations by Fieke Jansen on “Justice and Bias” and “Tech Context and Practice of Resistance”, Marieke van Dijk on “Design Research and Tooling”, and Bianca Herlo on “Digital Sovereignty”. The course was rounded off with input from external guests. Adam Harvey discussed the risks of facial recognition and introduced his research and artistic work. Denisa Kera presented on “‘Motley’ Design for the Age of Algorithmic Feudalism” and Ariel Guersenzveig on “Ethics and Artificial Intelligence”.

The aim of the seminar was to develop a nuanced attitude towards the subject and to generate ideas for low-tech design and fashion interventions that play with the weaknesses of invisible surveillance technologies. Four groups of students developed designs that put privacy, solidarity, equality, and the human at the center.

Group 1: Paranoia Box

Patricia Wagner, Athena Grandis, Anna Ryzhova

Issue

We use multiple electronic devices and countless apps to enhance and support us in every aspect of our daily lives. Many people are not aware that these apps collect private data that reveals important information about them. This data can be exploited by companies and governments.

Sadly, the discussion about data security often seems too complex for people without technical knowledge to follow. As a result, many people are indifferent to data security.

Project

The group believes that addressing the topic of data security in a humorous way can bring it closer to people and thereby raise awareness.

The Paranoia Box is a vending machine that stands in public spaces and is accessible to everyone.

It works as follows: (1) You pay at the machine with your credit card, which allows the machine to trace your personal data. (2) Using the credit card, the machine reads your personal data (Amazon shopping, holidays, Apple apps, etc.). (3) The machine produces an object that corresponds to your personal data and reflects your personality: an object, a doll, or a Chindogu object, as chosen by the user. (Chindogu is the practice of inventing ingenious everyday gadgets that seem to be ideal solutions to particular problems but may cause more problems than they solve.)

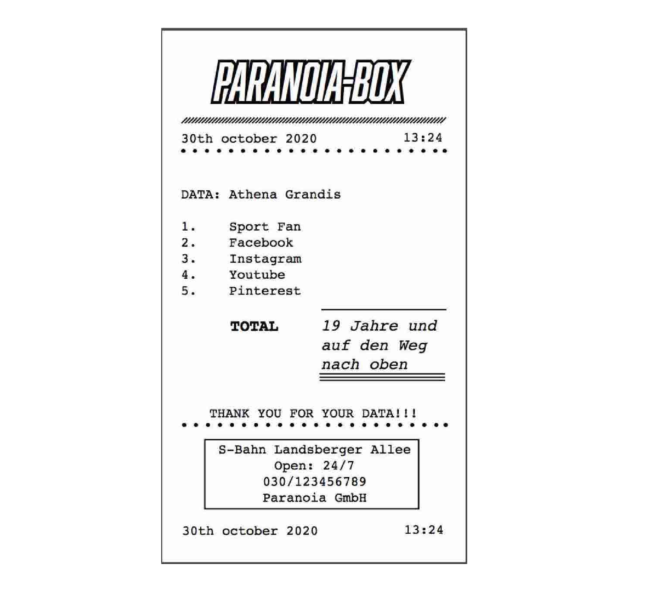

Along with the object, the machine dispenses two further items: (1) a Chinese fortune cookie containing a suggestion on how to better protect your data, and (2) a receipt listing which data was used to produce the object.

Group 2: Face Recognition Protection

Hyein Poy, Laura Talkenberg, Ivana Pavlickova

Issue

In a not-so-distant imagined future, the individual has no protection from facial recognition surveillance systems used by governments and capitalist companies. These surveillance systems inherently reproduce and multiply biases that are anchored in our society.

Project

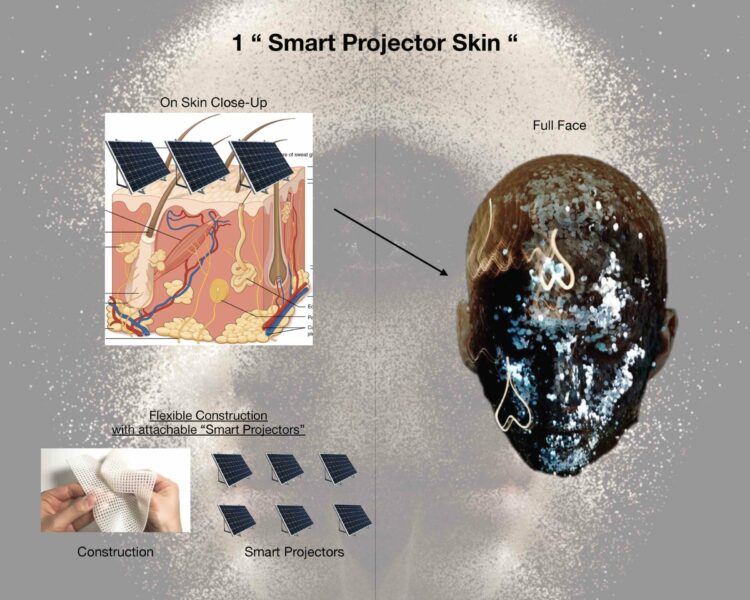

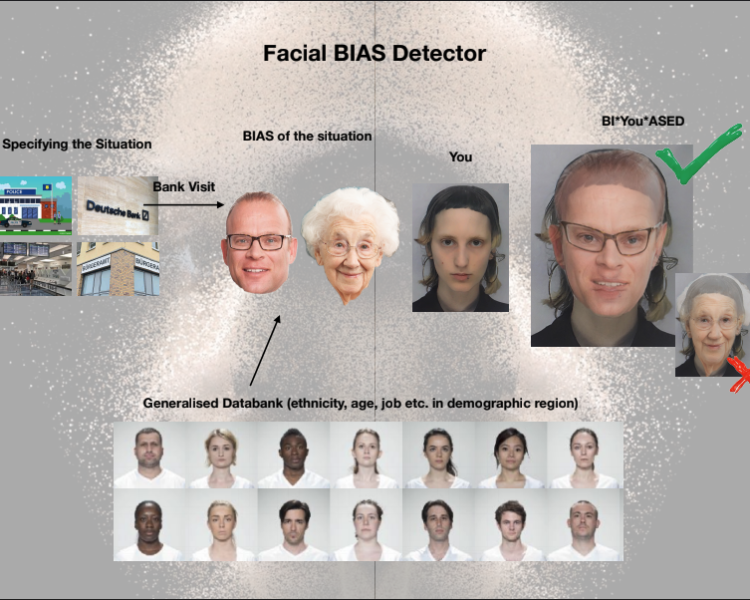

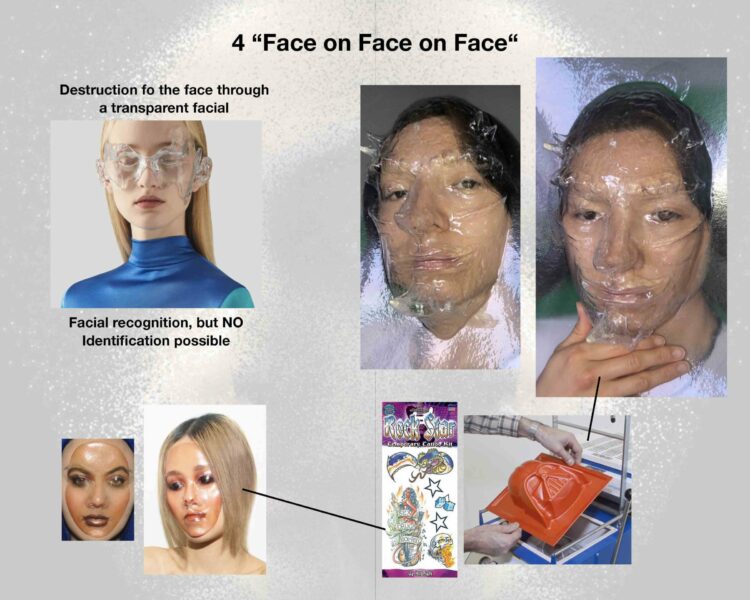

The group developed six ideas on how to protect oneself from facial recognition surveillance systems: (1) Smart Projector Skin, (2) Facial BIAS Detector, (3) Mirror Surroundings Option, (4) Make-Up Application, (5) Micro Mirror Makeup and (6) Face on Face on Face.

The face application either recognises the bias of the surveillance technology in place and helps the user take advantage of it, or generally helps the user hide from it.

The goal is to empower the individual and to create a tool that undermines the bias.

Group 3: The Ultimate Analogue Experience

Nataliya Susyak, Katherina Brackemann, Mia Gorte

“Winston stopped reading, chiefly in order to appreciate the fact that he was reading, in comfort and safety. He was alone: no telescreen, no ear at the keyhole, no nervous impulse to glance over his shoulder or cover the page with his hand. (…) It was bliss, it was eternity.” – George Orwell, 1984

Issue

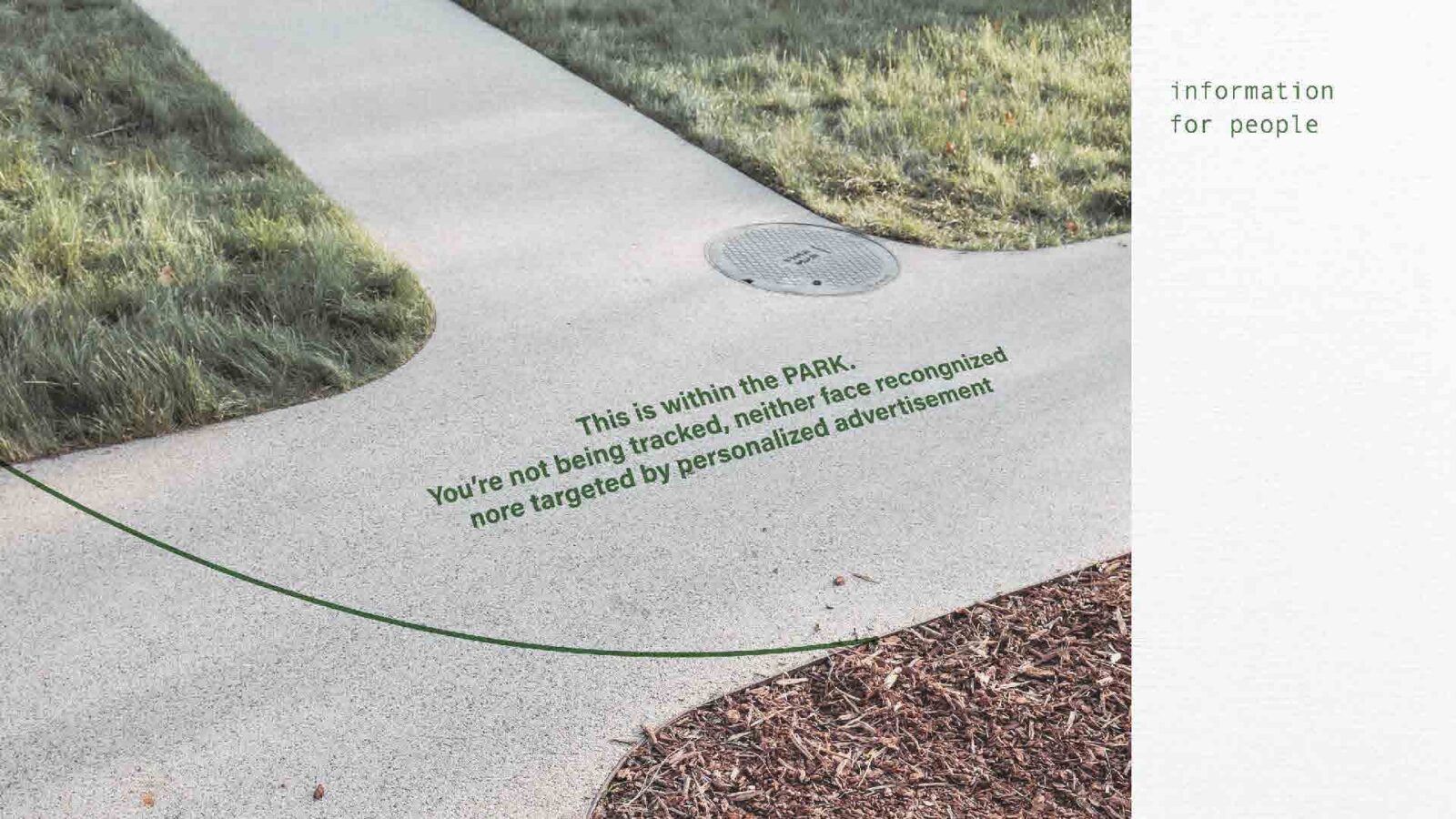

Today it is practically impossible to be offline. Even if one turns off all technological devices, one is still surrounded by signals of various kinds. Where is the right not to be tracked in the physical space of the city? What does offline mean? When are you really offline?

Privacy is a human right. It is an important factor in trust and secure communication, and it can protect people from pressure, discrimination, and the manipulation of attitudes and behaviour.

Instead of developing an app built around the idea of disconnecting, the group believes that technology must be answered in a non-technological way.

Project

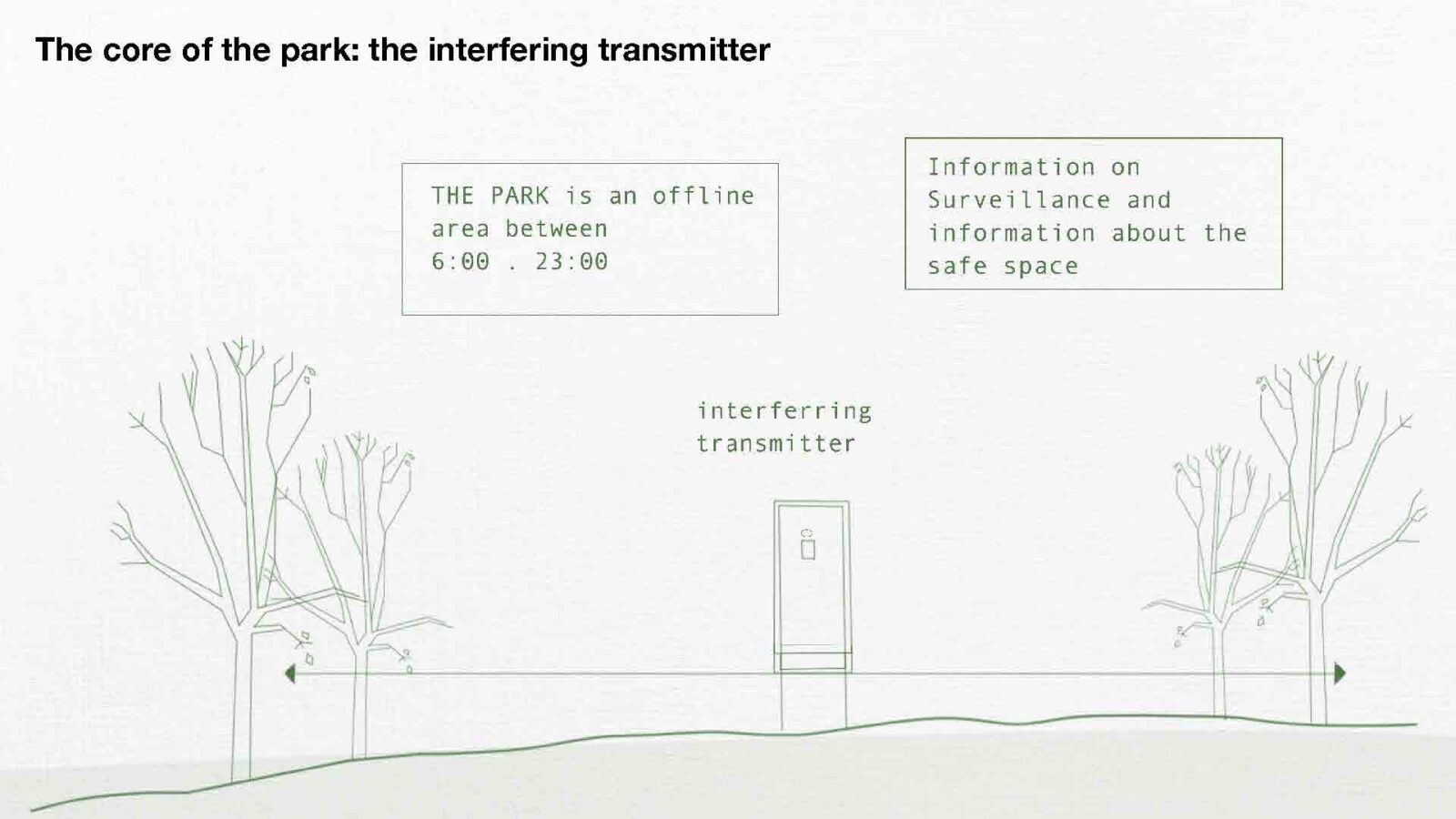

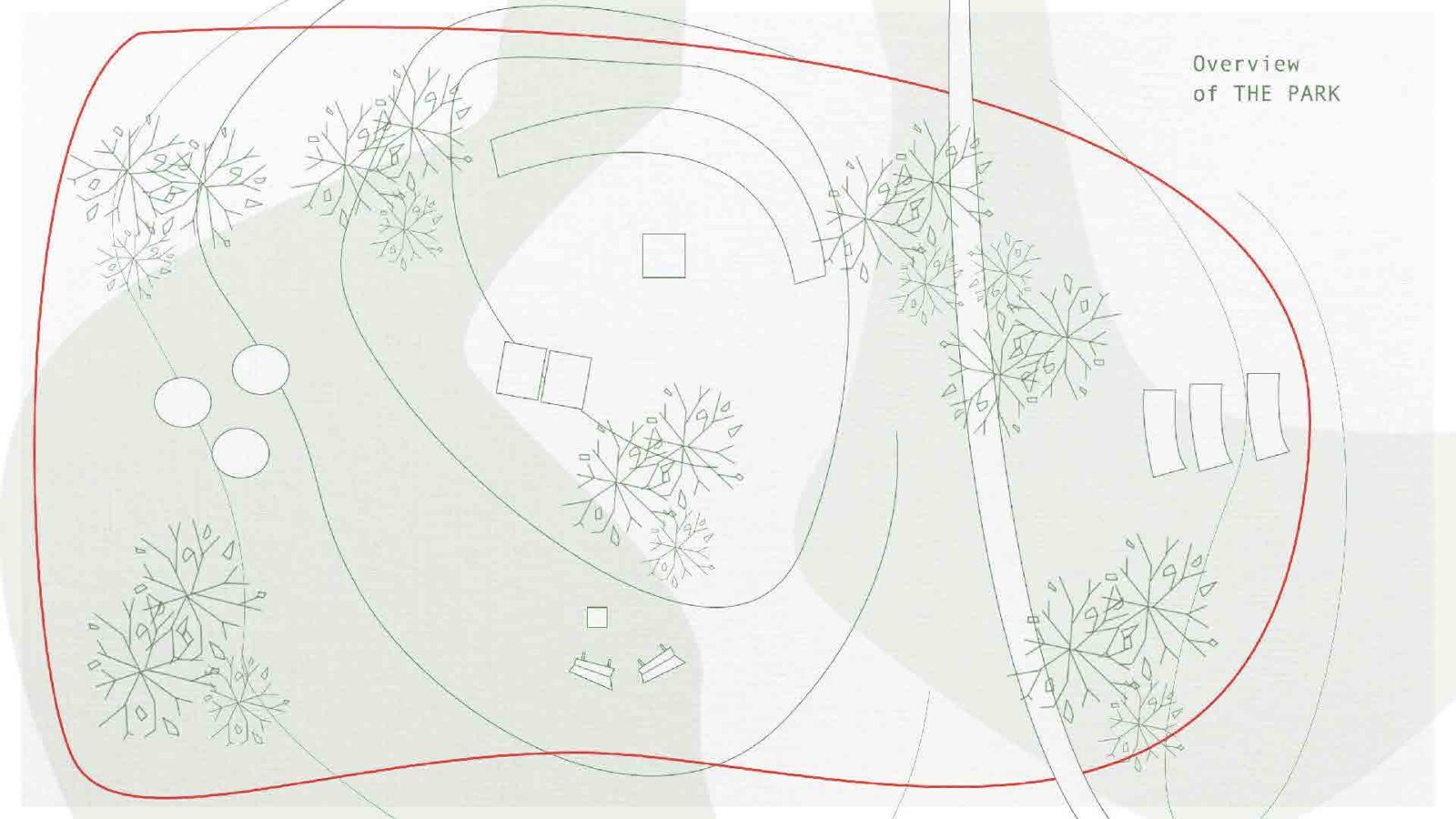

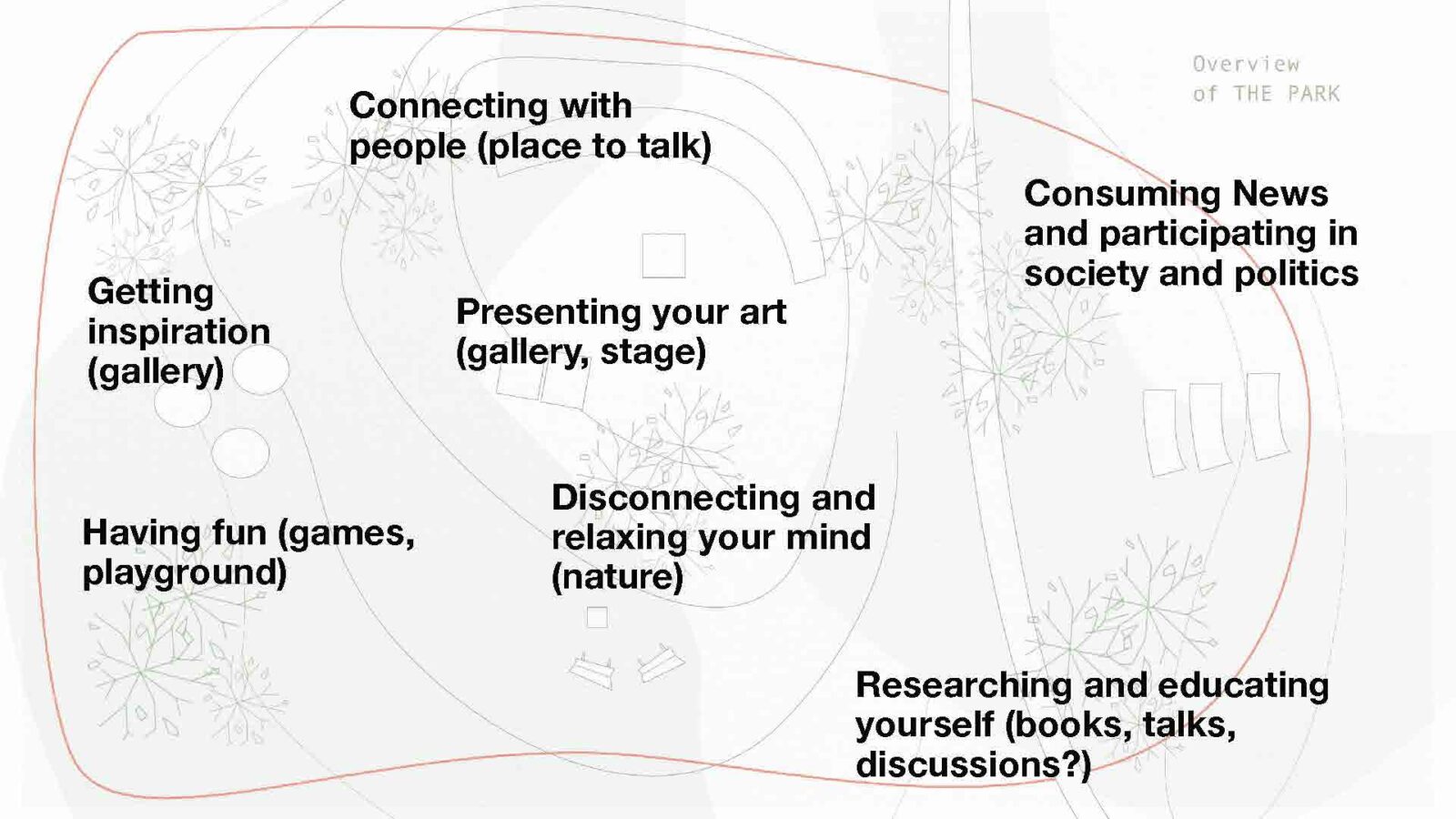

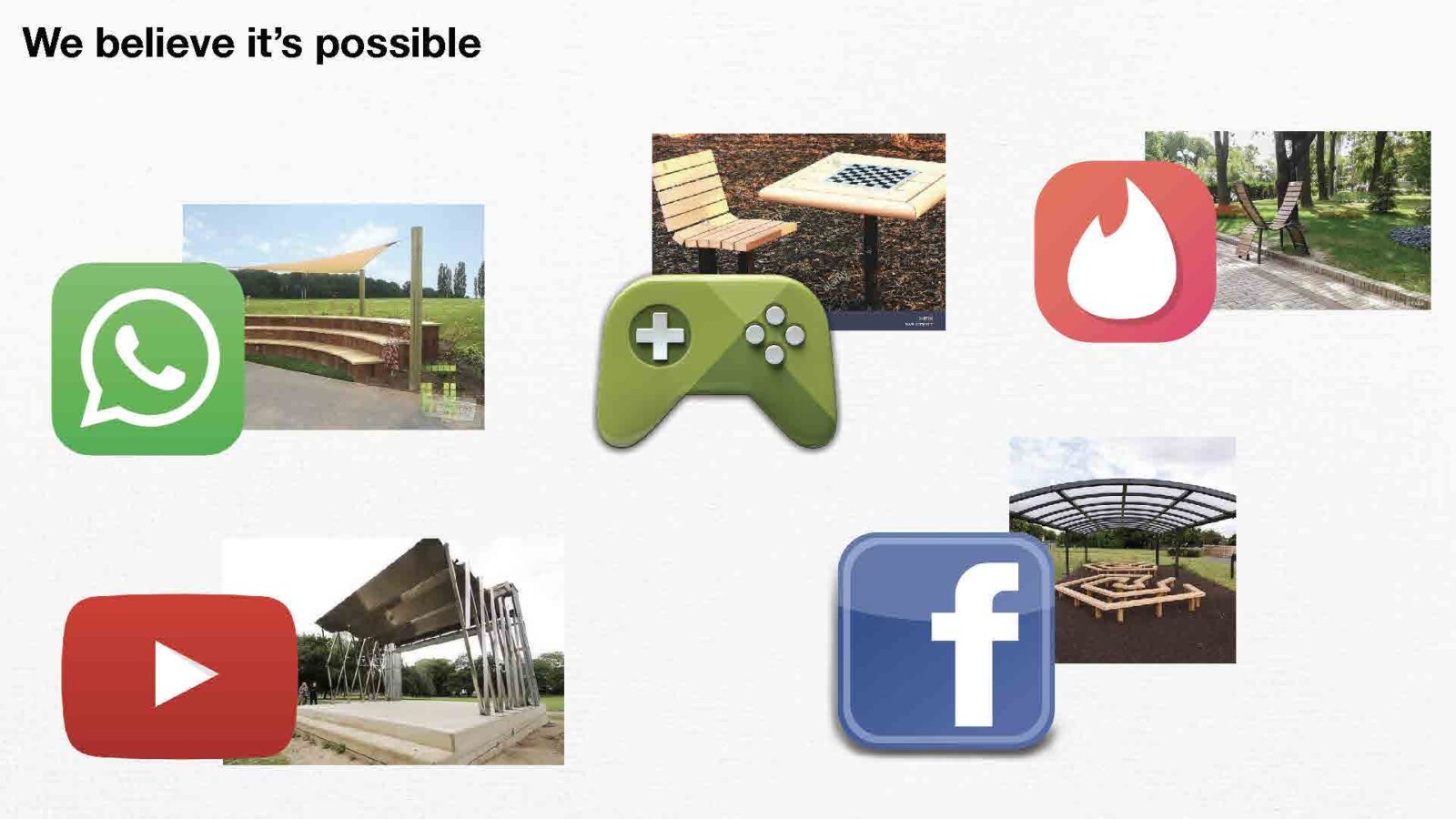

The Park gives you the Ultimate Analogue Experience. At its core is an interfering transmitter. The Park is free to use, cheap and easy to set up, and inclusive for all ages, genders, classes, etc. It is located in the city center and requires little infrastructure. It is sustainable and open to the public, yet private, since no data is either collected or shared. The Park emphasises the importance of socializing in the offline world and gives visitors the choice to be offline.

Group 4: Hand-picked

Gabriele Schliwa, Andreas Weidauer

Issue

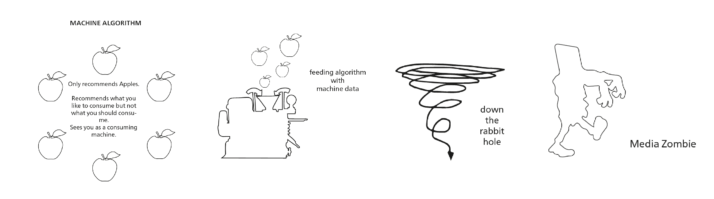

The group wants to challenge the idea of the “powerful algorithm” by understanding the major role algorithms play in our daily lives, and to give power back to the people.

Traditional media experiences such as TV, radio, and cinema are being replaced by a variety of social media platforms with automated and personalised recommendations.

This way of consuming and experiencing media is fragmented and self-centred. Algorithms recommend content based on existing data feeds, reinforcing what already exists. This leads to highly individualized experiences within a data bubble and a lack of common language.

Fig 1: Comic on how algorithms work

Project

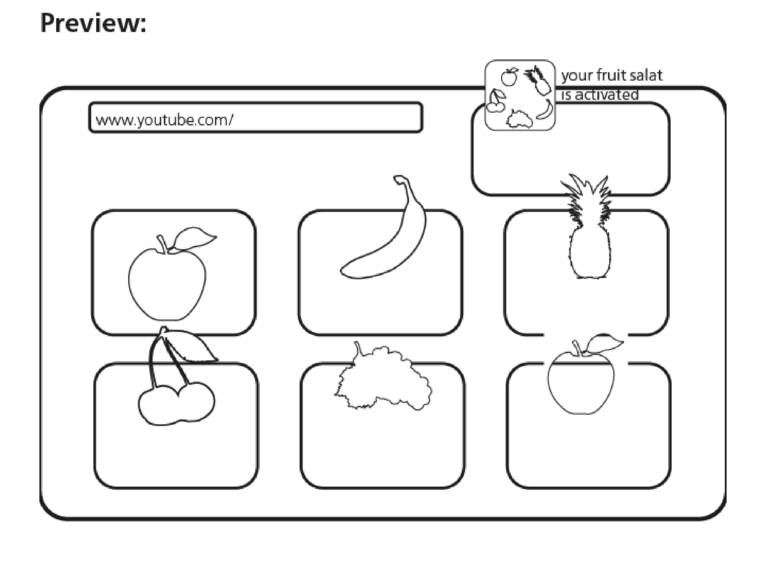

Hand-Picked App

The Hand-Picked App is a browser add-on that overlays machine recommendations with hand-picked recommendations from friends, interfering with the automated data feed by feeding it human data. By using the app, the user can hack the downward spiral and turn self-centred screen time into collective time.